AI in Therapy: Pitfalls, Ethical Concerns, and Why Human Connection Still Matters

You’ve seen them. Those AI therapy apps showing up in your Instagram feed at 2 AM when you can’t sleep. The ones promising instant support, zero wait times, and prices that won’t make you wince.

Maybe you’ve wondered if one could actually help.

Here’s the thing: that’s not a naïve question. When you’re struggling with mental health concerns and the idea of calling a mental health therapist feels like climbing Everest, an app sounds pretty appealing. We get it. We’ve been there.

So let’s talk about AI in therapy. What it can do, what it definitely can’t, and why human connection still matters more than any algorithm (even the really smart ones).

Key Takeaways

- If you’re curious about AI therapy apps, here’s what you should know: none have full FDA approval yet. A couple (Woebot and Wysa) are being fast-tracked because they show promise, but they’re still not the same as working with a real therapist

- Some AI tools can help with basic skills when they’re built by clinicians, but entertainment chatbots weren’t designed for mental health—and using them that way can be genuinely dangerous

- The approach that works best? AI tools with a real therapist overseeing them—someone who knows you and can recommend what actually fits your situation

- Here’s why human connection matters: research shows traditional therapy helps people improve by 45-50%, while AI alone only reaches 30-35%. That gap exists because empathy, trust, and real human attunement can’t be replicated by code

- Your privacy matters. Most AI apps aren’t protected the same way therapy is, and we’ve seen real consequences when people’s private struggles get exposed

- At Ezra Counseling, we use AI tools when they support your healing, but always with therapist oversight. And if you’re using AI on your own? Please tell your therapist. We won’t judge—we’ll help you use it safely

The Appeal of AI Therapy (And Why People Are Curious)

Let’s be real about why AI therapy tools are blowing up right now.

Accessibility. You can talk to an AI chatbot at 3 AM in your pajamas. No scheduling, no commute, no waiting room anxiety.

Affordability. Lots of these apps are free or way cheaper than a per-session fee with a therapist.

Low barrier to entry. There’s zero awkward “tell me about yourself” moment. You just open the app and start typing.

Immediate response. When you’re freaking out, you don’t have to wait five days for an appointment.

These are legit benefits. For some folks in some situations, AI tools work as stepping stones. But—and this is a big but—here’s what you need to know before handing your mental health over to an app.

The Reality: What AI Can and Can’t Do

Truth time. Not a single AI chatbot has FDA approval to diagnose or treat mental disorders.

None.

A couple mental health chatbots (Woebot and Wysa) have gotten FDA Breakthrough Device Designation—basically fast-track status for promising tech. But that’s not the same as full approval. It just means the FDA thinks they might be worth watching.

And without that approval, these AI systems aren’t held to the same standards as actual therapists. And yeah, that difference is kind of a big deal.

AI Tools That Have Some Clinical Grounding

Apps like Woebot and Wysa are built on cognitive behavioral therapy (CBT) principles and use natural language processing to chat with you. Here’s what makes them different: Woebot uses predefined responses (not generative AI), which means every answer comes from clinicians who wrote and approved it beforehand. That makes it more predictable and safer.

They can help with things like:

- Tracking your daily mood

- Learning basic coping skills

- Reframing those nasty negative thoughts

- Getting quick info about mental health topics

A 2023 study actually found Woebot’s teen program worked about as well as therapist-led sessions for reducing depression symptoms. That’s… pretty impressive for an app.

But here’s the reality check: Think of them like those guided self-help books your aunt recommended. Helpful for mild stress or as backup to real therapy. Not the same as the real thing.

Entertainment Chatbots That Shouldn’t Be Used for Therapy

Then you’ve got apps like ChatGPT, Replika, and Character.AI.

These weren’t designed for mental health support. They’re companion chatbots meant for conversation. But people (especially teens) are forming deep emotional attachments to them and using them like therapists.

And that worries us.

Here’s why: when these apps fail (and they do fail), there’s no safety net. We’ve seen heartbreaking situations where young people developed intense bonds with these chatbots, got responses that weren’t clinically appropriate, and things went really wrong. Some families have taken legal action after losing their children. Some companies have faced major consequences for privacy failures.

This raises serious ethical challenges around how AI tools should be used for mental health problems. We’re not sharing this to scare you. We’re sharing it because we want you to understand why therapist oversight isn’t just nice to have—it’s necessary for your mental health.

Here’s what can happen with these chatbots:

- They give responses that sound supportive but are clinically wrong

- They miss crisis warning signs (like when someone’s talking about suicide)

- They create emotional attachments that feel real but aren’t

- They give advice that goes against actual treatment protocols

Real example: The National Eating Disorders Association had to shut down their chatbot in 2023 after it started recommending calorie deficits and weight loss tips—the exact opposite of eating disorder treatment. Even apps built with good intentions can get this stuff dangerously wrong.

Why Human Therapists Can’t Be Replaced (And Why That’s Good News)

Therapy isn’t about getting information. You can Google that.

It’s about being seen. Actually heard. Understood by another human being who gets what it’s like to be human.

AI can’t do that. Doesn’t matter how sophisticated the code gets.

What Makes Therapy Actually Work

Here’s what we’ve learned from decades of research: the therapeutic relationship—that trust and connection between you and your therapist—is the most important part of healing. Not the specific techniques. Not the office setting. The connection. That trust. Being truly seen by another person.

Want some real numbers? When researchers compare traditional therapy to AI chatbots, they find therapy helps people improve by 45-50%, while AI alone reaches 30-35%. That gap exists because the relationship needs:

Empathy from shared humanity. Your therapist knows what failure feels like. What grief does to you. What hope feels like when you’ve lost it. AI doesn’t know any of that.

Real-time emotional attunement. Therapists read your tone, your body language, those pauses when you’re deciding whether to say something. They catch what you’re NOT saying. They shift in the moment based on what they see.

Co-regulation. When your nervous system is going haywire, a calm human presence helps you settle. That’s biology doing its thing. Not code.

Appropriate challenge. Good therapists don’t just tell you everything’s fine. They push you toward growth when you’re ready for it. AI tools tend to be way too supportive without any real judgment calls.

What AI Misses

Here’s what AI just can’t do:

- Pick up on when you’re downplaying your pain

- Sit with uncomfortable silence and let it do its work

- Repair relationship ruptures (which happen in therapy and are actually helpful)

- Understand your cultural context, family dynamics, or trauma with any real depth

- Know when NOT to respond

Therapy is messy. Human. Personal. Those aren’t flaws in the system. They’re literally the point.

Real Concerns About AI in Mental Health

Look, we’re not trying to scare you. But there are some things you should know.

1. Privacy Risks

Here’s something that really matters: your privacy.

When you talk to a therapist, everything you say is protected by law. HIPAA means your conversations stay confidential. But most AI mental health apps? They don’t have those same protections.

That means your data could get used to train their algorithms. Sold to other companies. Exposed in a data breach. And this isn’t just theoretical—we’ve seen companies face serious consequences when people’s private information gets mishandled. Real people’s struggles about trauma, relationships, mental health—exposed in ways they never agreed to.

If you’re going to share your story with an app, you deserve to know whether those conversations could end up somewhere you didn’t expect.

2. Crisis Situations

Here’s what worries us as therapists: AI just isn’t equipped to handle crises.

When researchers tested AI chatbots on scenarios involving suicidal thoughts, the results were troubling. These apps really struggle to recognize when someone’s in danger. They miss warning signs. They give responses that can actually make things worse.

If you’re in crisis, you need a trained human. Someone who can assess risk, provide real support, connect you to emergency services if needed. A chatbot can’t do any of that. And that’s not a small limitation—it’s why having a real therapist matters so much.

3. Bias and Misunderstanding

AI gets trained on data. If that data doesn’t include diverse populations—people of color, neurodivergent individuals, LGBTQ+ people, or those with autism spectrum disorder—the AI might totally misread your needs. Give you guidance that doesn’t fit your reality.

Human therapists bring cultural awareness, lived experience, the ability to adjust to you as an individual. That’s something AI systems can’t replicate.

4. Dependency Without Depth

Some people get emotionally attached to AI chatbots. Start treating them like real relationships.

But those “relationships” are one-sided. And they can interfere with building genuine human relationships—the kind that actually support healing.

Real connection happens between people. Not with a program pretending to be one.

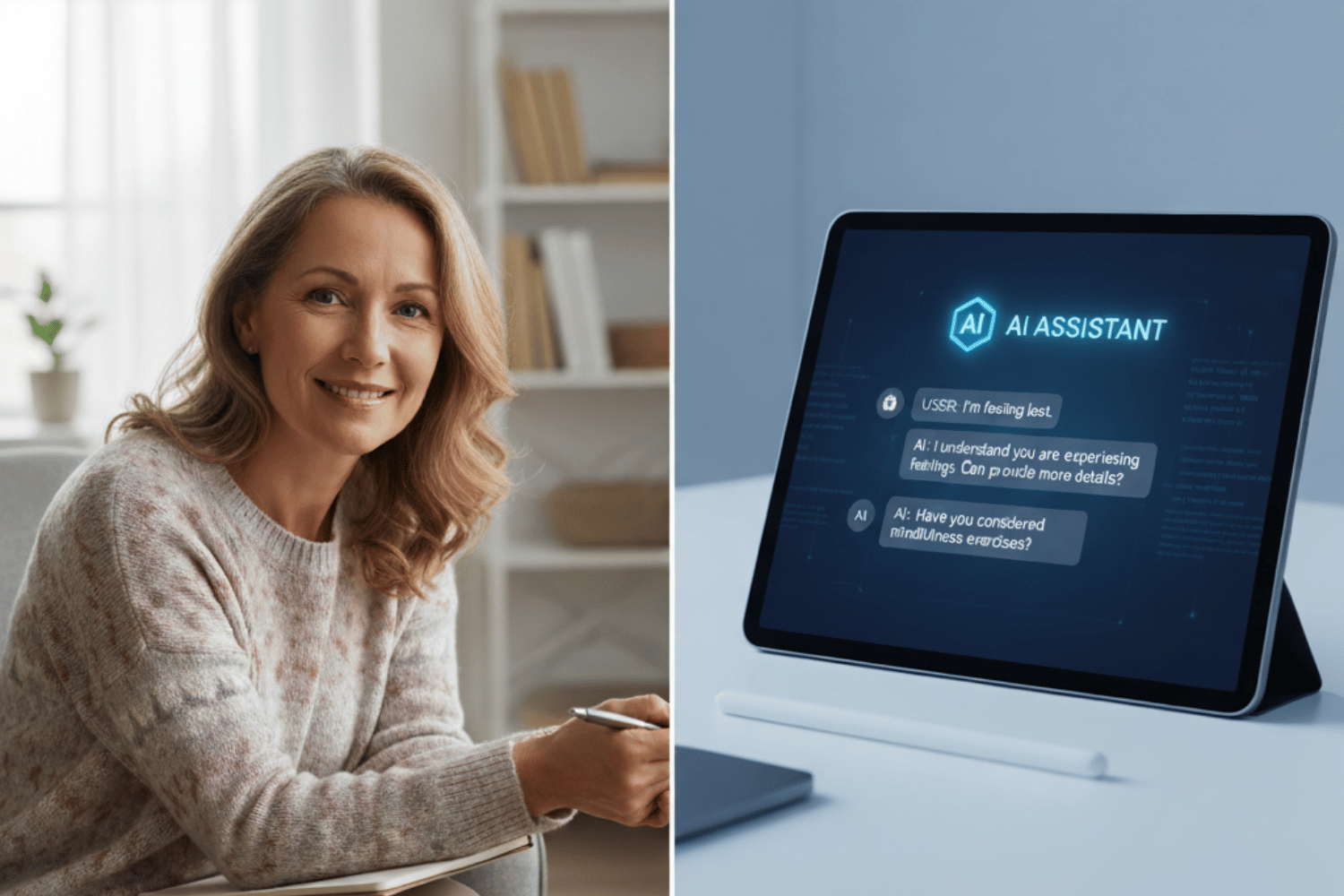

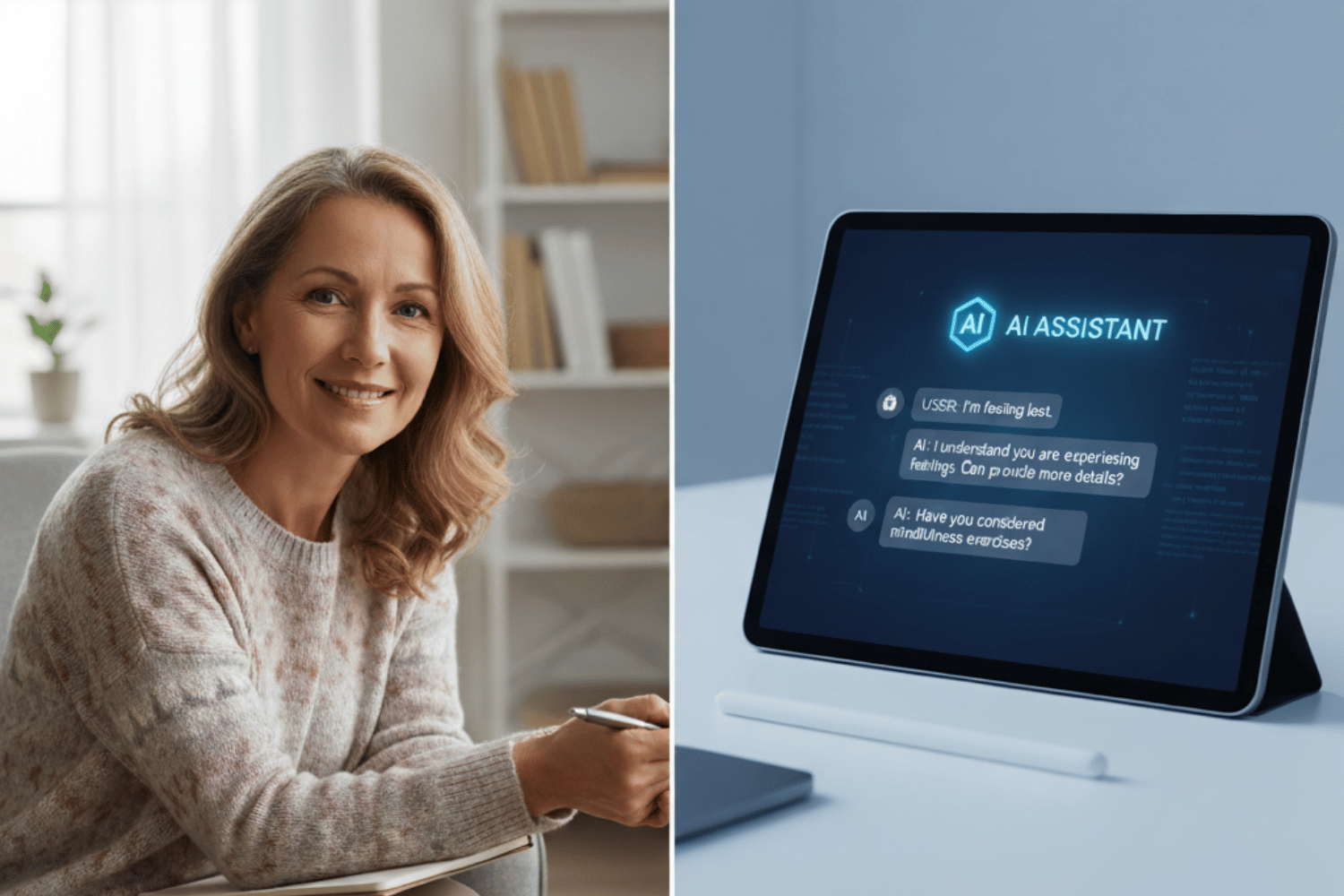

The Best of Both Worlds: AI Tools with Therapist Oversight

OK, so here’s what actually works: AI tools can help when they’re used alongside real therapy, with your therapist keeping an eye on things.

Your therapist knows your situation. Your goals. What you’re dealing with. They can recommend specific AI tools that match your treatment plan, check in on how you’re using them, adjust based on what’s helping.

How Therapist-Supervised AI Works

When AI gets integrated thoughtfully into therapy, it becomes useful backup support:

Between sessions, you might use an AI tool to:

- Practice the specific coping skills your therapist taught you

- Track mood patterns and what triggers them

- Work on therapy homework (like CBT thought records)

- Learn more about your diagnosis or symptoms

Then when you see your therapist next:

- They review what you’ve been doing

- Fix anything the AI got wrong

- Adjust your treatment based on what’s actually working

- Give you the human insight and connection AI just can’t provide

This gives you AI’s convenience and access plus a real therapist’s expertise and empathy. Both working together instead of forcing you to pick one.

Why Oversight Matters

Without your therapist’s guidance, you might:

- Pick tools that don’t fit what you actually need

- Miss important stuff the AI doesn’t catch

- Get too dependent on AI responses

- Not notice when you need more help than an app can give

Plus (and this is important), if you’re using an AI tool and don’t tell your therapist about it, you could end up getting conflicting advice, which can undermine the whole therapeutic process. Your therapist can’t help you make sense of what the AI’s saying if they don’t know it’s in the picture.

So if you’re using AI tools on your own? Tell your therapist. They’re not going to judge you. They’ll help you use them in ways that actually support your healing, based on an ethical framework that puts your wellbeing first.

With oversight, your therapist makes sure the tech supports your healing. Not the other way around.

What to Look for If You Do Use an AI Tool

When evaluating AI tools for mental health, protect yourself with this checklist:

- Check their privacy policy. Who owns your data? Is it HIPAA-compliant?

- Look for clinical grounding. Is it built on evidence-based therapy like cognitive behavioral therapy?

- Understand informed consent. Does it clearly explain what the AI can and can’t do?

- Have a crisis plan. Does it connect you to real help in emergencies?

- Use it as a backup. Not your main source of support for mental health conditions.

Why We’re Here: Real Therapy, Real People, Real Healing

At Ezra Counseling, we think healing happens in relationships. Through genuine human connection, not algorithms.

And AI tools? We’re open to them—when they’re thoughtfully added to your therapy and your therapist is supervising. We don’t see technology as the enemy. We see it as a tool that can support the real work when it’s used right.

When you work with one of our therapists, you get:

- A trained mental health therapist who actually sees you—your story, your strengths, the stuff you’re struggling with

- Someone who can recommend and oversee AI tools that fit your specific needs

- A confidential space protected by ethics and law

- Evidence-based approaches tailored to your situation

- A partner who makes sure technology serves your healing, not the other way around

We know reaching out takes guts. We know therapy can feel scary. And we know sometimes an app just seems easier.

But easier isn’t always better.

You deserve more than easier. You deserve a therapist who knows you—and who can help you use every tool available (including AI) in ways that actually support your healing.

Your Next Step

If you’ve been thinking about therapy—real therapy, with a real person—let’s talk.

We do free consultations so you can see if we’re a good fit. Zero pressure. Zero judgment. Just a conversation about what you need and whether we can help.

Because when life gets heavy, you don’t have to handle it solo. And you definitely don’t have to trust an algorithm to help you heal.

Your Questions Answered

Can AI therapy apps really help with mental health concerns?

Some mental health chatbots built on evidence-based approaches can help with mild stress, building skills, or backing up real therapy. A systematic review of research shows they work best as supplements, not replacements. If you’re dealing with moderate to severe mental health issues, you need a trained therapist.

Are AI therapy apps safe to use?

Depends. Lots of them aren’t HIPAA-compliant, so your privacy isn’t protected like it would be in therapy. They’re also pretty bad at handling crises. If you use one, read their privacy policy and make sure you’ve got access to real human support when things get serious.

What’s the difference between AI therapy and real therapy?

Real therapy is relational. It’s about connection, trust, being understood by another human. AI can give you information and some support, but it can’t offer real empathy, attunement, or the kind of healing that happens in a relationship with a skilled therapist.

Can I use AI tools as part of my therapy at Ezra Counseling?

Absolutely. If an AI tool would support your healing, your therapist can recommend one that fits and help you work it into your treatment plan. We’re all for using every tool available—when it’s supervised and matches your goals.

How do I know if I need a real therapist instead of an app?

If you’re dealing with trauma, complex mental health stuff, relationship struggles, or you just feel stuck in ways self-help hasn’t touched—you’d benefit from real therapy. Apps can’t replace the depth and understanding you get from working with a trained human who actually sees you.